Here's a brief bio of me that includes snippets of my life.

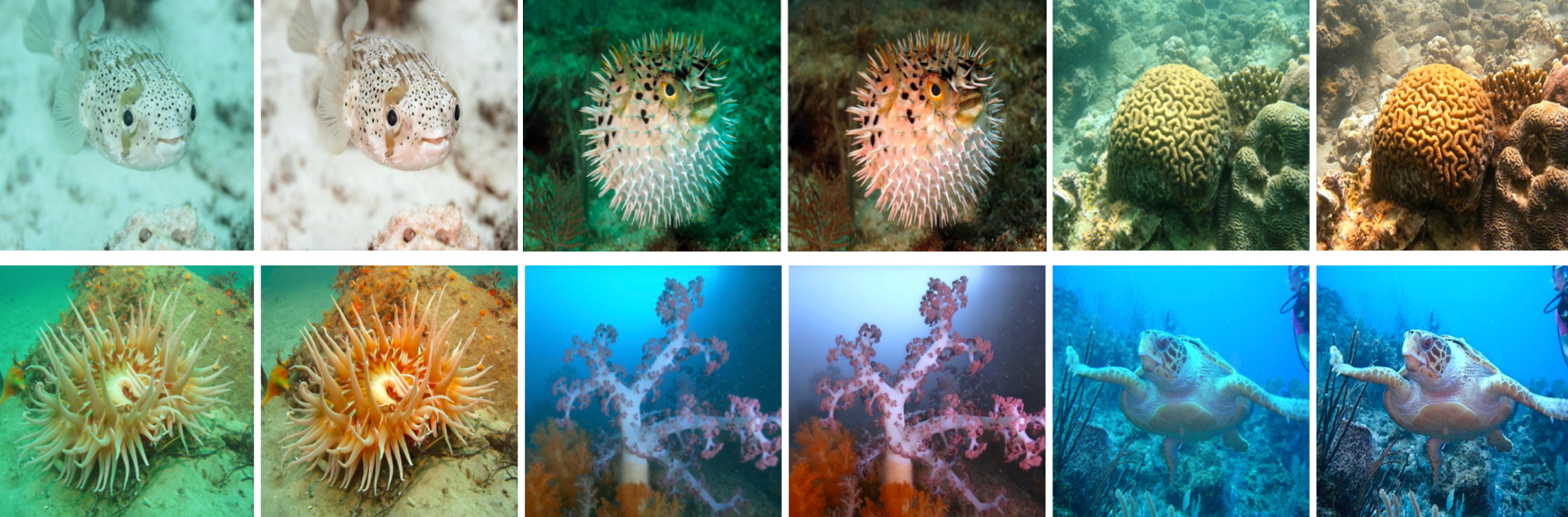

I was born and raised in Seoul, Korea, until middle school, then went to high school on Jeju Island, a volcanic island at the southern tip of Korea. I spent a lot of time biking and diving there. Seeing the beauty of those waters made me want to study marine science in college.

A lot changed in my first semester of college. At a friend's nudge, I took an introductory CS class taught in Snap! (Berkeley's version of Scratch) and loved it. Maybe it was having a friend to build fun projects with, plus the many dorm friends who were also taking CS classes.

When COVID struck, I came back to Korea and served in the Korean Navy. I was a boatswain's mate on a battleship, but toward the end of my service I met a friend who introduced me to ML. It felt like a happy combination of science and engineering. After my service, I did my first ML project, in underwater computer vision, turning my distorted diving photos into clean ones.

I went down lots of rabbit holes during this era, mostly in computer vision. It was an exciting time, with big ideas coming out every month.

I started to wonder what it would be like to dive into these rabbit holes full-time, away from classes for a while.

In the spring of 2024, I started a gap year to study full-time.

I discovered that I highly value explainability in an approach, and that making programs go fast is a lot of fun.

This got me into the world of making ML faster and more efficient.

I loved exploring performance optimization for large-scale training and inference, all the way from single-chip kernels to multi-chip sharding.

I graduated in the winter of 2025 and started at Google DeepMind.

If any of this interests you, please reach out!

email: ko.hyeonmok at berkeley dot edu

github: henryhmko

The format of this blog was inspired by Lilian Weng, Simon Boehm, and Fabien Sanglard.