2024 was a year of shaping my taste and being humbled.

I spent most of 2024 on a gap year from my undergrad. My initial plan was to dive fully into the math behind ML and build intuition for why it works so well. Here's what I thought back when I started:

"...I want to take the following year away from school to reach out to the things I wish to learn with my own curriculum with the goal of developing my depth of knowledge in both science and engineering. I will start from going over the fundamentals again and then branch out to tools and puzzle pieces which I believe will help me tackle larger problems in the years to come…"

- me back in March 2024

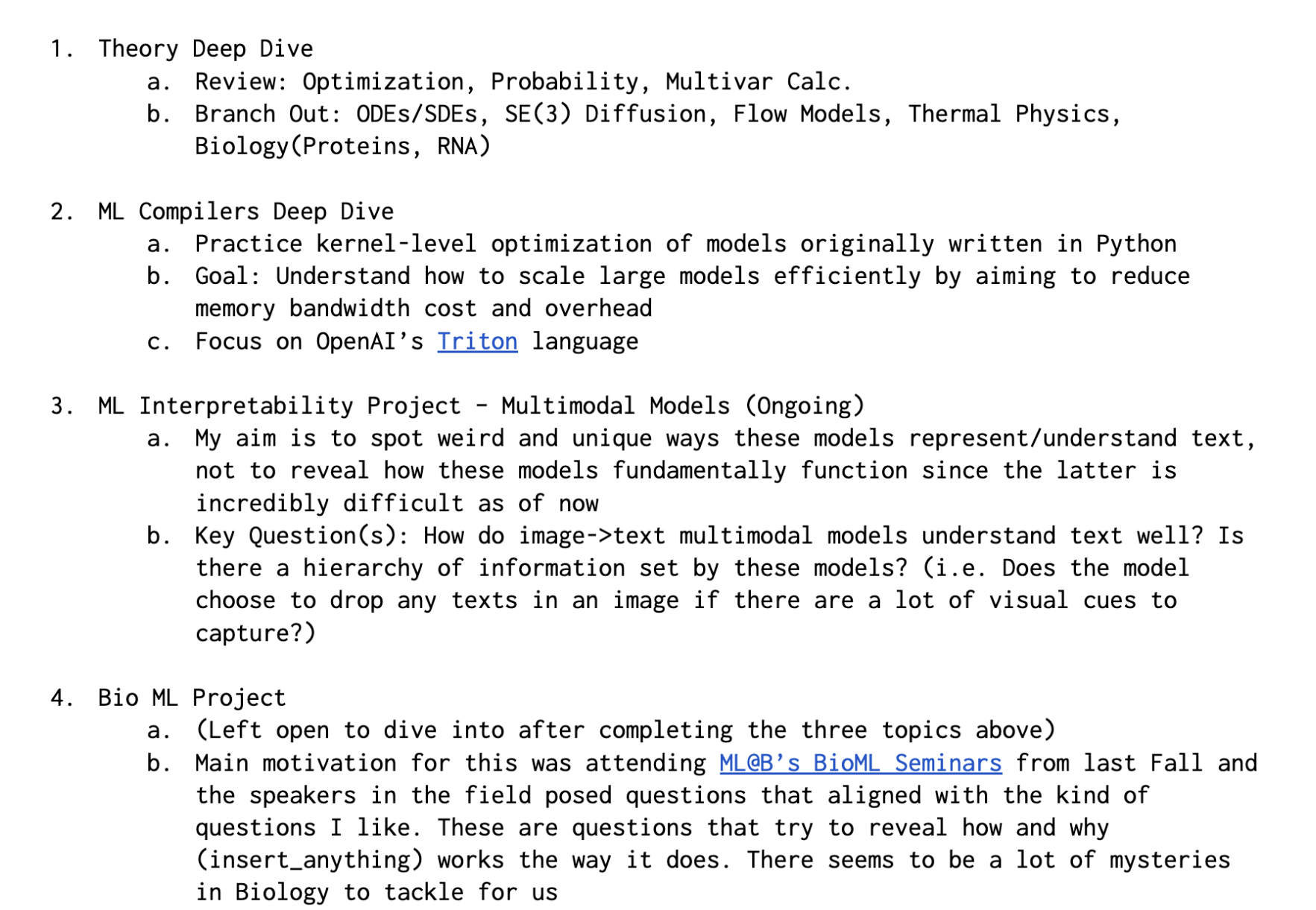

And I mapped out the year ahead like this too:

It's odd but I wanted to take a gap year to study on my own. (There’s another side to this where I was feeling lost and struggling in school, which I talk about over here.)

Looking back, I had these two assumptions deep down:

- My time would be better spent outside of coursework that zooms past the content & gives tedious homework

- I could cover multiple directions (theory, systems, interpretability, bio) in ML if I were just given the time

And now, at the end of the gap year, I realize I was rash in making these assumptions. Here’s a summary of how my viewpoints have changed.

I realized...

- Multiple directions of research cannot be covered at once, especially when I have weak foundations in them

- The best way to build strong foundations is through in-person coursework

- What felt like “zooming past the content” was actually the professor’s good balance of breadth and depth to teach what’s practical

- Having a group of friends to work/learn together with is a big support factor

- I should focus on building advanced foundations in practical, hard skills that are transferable rather than niche ones

  - good example: algorithms, computer systems

  - bad example: SE(3) diffusion

- I like performance engineering a lot

- I love the certainty in progress metrics and clear explainability

I still believe...

- Strong foundations are the #1 factor to tackling larger questions

  - (Creating new approaches) >>> (HUGE WALL) >>> (Understanding new approaches)

  - And this jump comes from how strong my foundations in that field are

What did I actually do?

March - April: Review optimization & probability + Paper perusing

One difficult thing from this period was finding the balance between breadth and depth in what I reviewed. Some days I would feel unproductive for going unnecessarily deep into one very niche topic. And I realized course syllabi actually do a good job of managing this balance, especially since not all parts of a thick textbook on X are relevant.

Another thing was that it got lonely quickly. The idea of studying in my own world by myself was less ideal than I thought it would be.

I wanted to find a team to either study or do projects with.

May - August: Worked on a uni lab project for protein-nucleic acid complex structure estimation

I joined an ML lab at Seoul National Uni that was working on a Bio ML project. AlphaFold 3 (AF3) came out the week after I joined, and a mix of excitement and hints of sadness was apparent in the team.

The sources of sadness were a complicated mix. There was spite over AF3 being closed-source and also some powerlessness in seeing the disparity in compute. But the largest source of discontent seemed to be the heavy emphasis on engineering rather than science in how AF3 was built.

But I loved AF3's emphasis on parallelization and efficiency. It seemed ironic to me that a large company like DeepMind focused more on compute efficiency than we did, when we were very GPU-poor compared to them. That's why I spent most of my time on the project finding ways to make the model faster and more efficient.

This was my first taste of performance optimization, and by the end I got the model to run 3x faster. But the more important part was discovering how much I loved the systematic process of identifying bottlenecks and seeing concrete improvements in speed.

The interpretable and concrete side to performance drew me in. I often felt lost in deep learning whenever I couldn’t explain what the model was actually doing under the hood.

September: Hooked on GPU Performance techniques & went through a textbook

Being hooked on performance, I wanted a more fundamental approach, so I picked up a GPU performance textbook.

But the more I learned, the more I noticed that methods for improving performance are all based on a good understanding of fundamental computer systems.

I realized that I needed a much stronger base in systems knowledge. And this was when I decided to go back to school, since there's good coursework and friends to learn together with.

It was funny to see that I spent a gap year to realize how important coursework is, along with having a group of friends to work together with.

October - December: Contract work on DL for audio + Open source for Performance libraries (SGLang & HuggingFace Accelerators) + less ML and more books and movies

These months were more chill and I relaxed a lot. I also travelled to Hokkaido, Japan.

Gap year conclusions

Going back, I want to build more advanced hard skills in Systems so I can be good at making things faster and more efficient.

I don't have a grand application for this in mind yet, mostly because whatever application I can think of now is capped by the small amount of knowledge I currently have.

I'll put my head down and work. Then I'll begin to see more of what I could do.